OpenAI Just Bought the Arsonist: Why the OpenClaw Acquihire Proves the Circle Jerk is Going Vertical

Date: February 15, 2026

Event: OpenAI hires Peter Steinberger (creator of OpenClaw execution framework). YC W26 deploys autonomous agents with unconstrained write-access.

Status: The loop is closed. The generation of entropy is now vertically integrated.

Werner Vogels, Amazon CTO, coined a term at AWS re:Invent 2025 that captures the structural crisis now accelerating across enterprise software: Verification Debt.

Verification is where responsibility transfers from the system to the human.

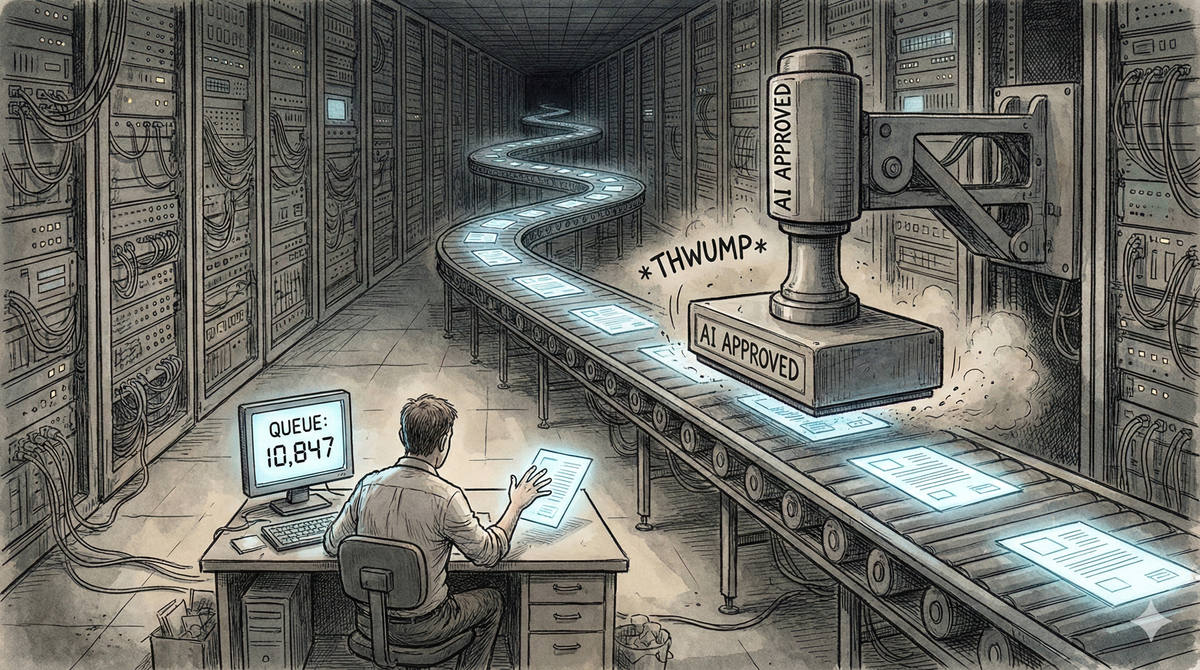

Technical Debt was bad code you wrote to ship fast. You owed the system a refactor. Verification Debt is plausible code the AI wrote instantly. You owe the system a review.

The difference is that Technical Debt was bounded by human typing speed. Verification Debt is bounded only by compute capacity, which is functionally infinite.

On February 14-15, 2026, OpenAI announced they had hired Peter Steinberger, the creator of OpenClaw (the open-source agentic framework that gives AI models unconstrained write-access to enterprise systems via desktop GUI control). Steinberger isn't some indie open-source developer. He's an exited founder (PSPDFKit) already embedded in the VC ecosystem.

This isn't an acquisition. This is talent reallocation within the same incestuous funding network.

OpenClaw itself remains open-source, which makes the move even more revealing. OpenAI doesn't need to own the code. They just need the architect who understands how to integrate autonomous execution into their reasoning layer. The framework will keep spreading. The exploits will keep getting deployed. OpenAI just wanted first-mover advantage on productizing it.

The timing is exquisite. Just weeks after Y Combinator funded both the arsonist (Tensol, deploying OpenClaw with no guardrails) and the fire department (Clam, selling a "semantic firewall" to protect you from OpenClaw), OpenAI decided to bring the framework's creator in-house.

This is not a product strategy. This is vertical integration of entropy.

Securitizing Verification Debt: The Subprime Mortgage Moment

This is not hyperbole. This is pattern recognition.

In 2007, banks took toxic assets (subprime mortgages), bundled them into tranches, stamped them "AAA-rated," and sold them to pension funds as safe investments. The credit rating agencies provided the mathematical blessing. The risk models said everything was fine. Right up until it collapsed.

We are doing the exact same thing with code.

We are taking thousands of unverified, machine-generated Pull Requests (subprime code), bundling them into CI/CD pipelines, stamping them "Approved via AI Agent," and pushing them into production. We are building Collateralized Debt Obligations out of stochastic code.

The math looks good on paper. The agents pass the tests. The code compiles. The velocity metrics go up and to the right. But nobody is asking the fundamental question: Who verified this?

The answer is: Nobody. The AI generated it. The CI system auto-merged it. The CISO assumes someone reviewed it.

This is February 2026. This is the exact month the tech industry started writing the bad checks that will cause the next massive infrastructure collapse.

The Physics of the Trap

Recent research quantifies the cognitive cost: verifying AI-generated outputs introduces a 6.5x latency penalty compared to performing the task manually. This is because AI generation is probabilistic. It produces "plausible" outputs, not "correct" ones. Detecting the difference requires rebuilding the entire mental model from scratch. You cannot skim AI code the way you skim human code. Every line is a potential hallucination.

Sonar's 2026 State of Code report confirms the crisis: 96% of developers do not fully trust AI-generated code, yet only 48% consistently verify it before committing. We are securitizing this debt, bundling unverified changes into production releases and hoping the interest payments (outages, breaches) do not bankrupt the company.
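The two Sonar percentages also put a floor under the problem. Treating them as shares of the same respondent pool (an assumption; the report may have asked each question of a different subset), let ¬T be "does not fully trust AI-generated code" (96%) and ¬V be "does not consistently verify it" (100% − 48% = 52%). The standard lower bound on the overlap of two events gives:

```latex
P(\neg T \wedge \neg V) \;\ge\; P(\neg T) + P(\neg V) - 1 \;=\; 0.96 + 0.52 - 1 \;=\; 0.48
```

So, under that assumption, at least 48% of developers distrust the code and still do not consistently verify it before committing.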

Why "Better Models" Won't Fix This

The inevitable venture capital counter-argument is already forming: "As reasoning models improve (GPT-5, o4, etc.), hallucination rates will drop to zero, and Verification Debt will disappear."

This is a category error.

Verification is not an accuracy problem. It is a custody problem.

Even if an agent achieves 99.9% accuracy, in a compliance-bound enterprise (SOC2, FedRAMP, HIPAA), an automated action still requires a human signature. The queue is not generated by the presence of errors. The queue is generated by the process of establishing trust.

You cannot algorithmically compress a human signature.

A perfectly generated Pull Request triggers the same compliance latency as a flawed one, because the human reviewer must still:

- Understand the change

- Verify it matches the stated intent

- Confirm no regression occurred

- Assume legal liability for the merge

Verification is not a bug-hunting exercise. It is an act of legal custody.

This is why the "models will get better" defense is structurally irrelevant. The bottleneck is not hallucination frequency. The bottleneck is human attention, which remains linear and non-compressible.
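The custody argument reduces to a little arithmetic. The sketch below assumes every merged change needs exactly one human sign-off and a hypothetical 15 minutes of review per change; neither number comes from a study. Accuracy changes how many defects slip through, but it never enters the review-time term.

```python
def custody_load(changes: int, accuracy: float, minutes_per_review: float) -> dict:
    """Compare expected defects with the review work custody still requires.

    Assumption (hypothetical): every merged change needs one human sign-off,
    whether or not it is correct, so review time depends only on `changes`.
    """
    expected_defects = changes * (1.0 - accuracy)
    review_minutes = changes * minutes_per_review  # accuracy does not appear here
    return {
        "expected_defects": expected_defects,
        "signatures_required": changes,
        "review_hours": review_minutes / 60.0,
    }


# Same 1,000 pull requests at three accuracy levels.
for acc in (0.90, 0.99, 0.999):
    load = custody_load(changes=1_000, accuracy=acc, minutes_per_review=15)
    print(f"accuracy={acc:.3f}  defects~{load['expected_defects']:.0f}  "
          f"signatures={load['signatures_required']}  "
          f"review_hours={load['review_hours']:.0f}")
```

Going from 90% to 99.9% accuracy cuts expected defects from about 100 to about 1, but the 1,000 signatures and 250 review hours do not move.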

The Queue Theory That Kills the Thesis

Queueing theory provides the mathematical framework to understand why this model fails.

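Model verification as a single-server queue with random arrivals and random service times (the M/M/1 model), where λ is the arrival rate of verification tasks and μ is the service rate of human verifiers. The mean time a task spends in the system, waiting plus being reviewed, is:

W = 1/(μ − λ)

The formula holds only while λ < μ. As λ approaches μ from below, W grows without bound; once λ reaches or exceeds μ, there is no steady state at all.

As AI tools push λ far past μ, the system never recovers: the queue of unverified changes grows every hour it runs. The backlog of unverified changes becomes a liability, not an asset.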

The throughput mismatch is structural: a single agent can generate hundreds of changes per hour. A human can responsibly verify only a handful. This is not a training problem or a tooling problem. This is physics.
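A minimal sketch of both regimes, assuming the M/M/1 model above. The reviewer capacity of 4 changes per hour and the agent output of 200 changes per hour are hypothetical numbers chosen only to illustrate the shape of the curve. Below capacity the closed-form W applies; above capacity a simple fluid approximation, (λ − μ) × t, tracks how fast the unverified backlog piles up.

```python
def mm1_wait(lam: float, mu: float) -> float:
    """Mean time in system for an M/M/1 queue: W = 1 / (mu - lam).

    Only defined for lam < mu; at or above capacity there is no steady state,
    which we report as infinity.
    """
    if lam >= mu:
        return float("inf")
    return 1.0 / (mu - lam)


def backlog_after(hours: float, lam: float, mu: float) -> float:
    """Fluid approximation of unverified changes left after `hours` of overload."""
    return max(0.0, (lam - mu) * hours)


mu = 4.0  # hypothetical: one reviewer clears 4 changes per hour

# Below capacity: W explodes as lambda creeps toward mu.
for lam in (2.0, 3.0, 3.9, 3.99):
    print(f"lambda={lam:5.2f}/h  W={mm1_wait(lam, mu):7.2f} h")

# Above capacity: a hypothetical agent producing 200 changes/hour.
print(f"backlog after an 8-hour day: {backlog_after(8, 200.0, mu):.0f} changes")
```

With these inputs, W goes from 0.5 hours to 1 hour, then to 10 and 100 hours as λ closes the last few percent of the gap, and a single overloaded day leaves 1,568 changes nobody has looked at.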

The OpenAI Play: From Platform to Execution Layer

The Steinberger hire isn't about acquiring code. OpenClaw stays open-source. This is about capturing the execution layer architecture.

OpenAI already owns the reasoning layer (GPT-4o, o1, o3). By hiring the creator of the dominant agentic execution framework, they now own the integration playbook for the action layer. This is the critical move in the AI value chain:

- Layer 1: Reasoning – What should the agent do? (OpenAI already owns this)

- Layer 2: Execution – How does the agent do it? (Steinberger brings the OpenClaw playbook)

- Layer 3: Verification – Did the agent do it correctly? (This remains the human bottleneck)

The fact that Steinberger is already VC-connected (exited founder, PSPDFKit) reveals the structural truth: this isn't "talent acquisition." This is insider talent reallocation. The same funding networks that backed his previous exits are now positioning him to productize autonomous execution at OpenAI.

The open-source nature of OpenClaw makes this even more insidious. The framework keeps spreading. Startups like Tensol keep deploying it with no guardrails. And OpenAI gets to be the "responsible" player who "hires the expert" to "ensure safe deployment" while the ecosystem continues to burn.

The problem is that Layer 3 does not scale.

The Racketeering Model of Enterprise AI

Here is what the YC W26 batch teaches us about the AI tooling market:

- Sell the disease. Fund Tensol to deploy OpenClaw agents that can autonomously mutate enterprise data without security boundaries.

- Sell the probabilistic band-aid. Fund Clam to sell you a "semantic firewall" - just an LLM guessing whether another LLM's hallucination is malicious. You are putting a probabilistic bouncer at the door of a probabilistic casino.

- Sell the alert triage system. When Clam generates 5,000 false-positive alerts per day, fund another AI agent to deduplicate and prioritize the alerts.

It is an infinite loop of entropy. You are solving AI-generated problems with more AI, while the human operator drowns under the notification queue.

This is the liability amplification loop: each new AI tool claims to solve the previous one's problems, but every "solution" just adds another layer of unverified output that requires human custody.

Consider the compute architecture when you stack these tools:

- OpenClaw generates an action: DELETE /leads/stale

- Clam intercepts and runs an inference to evaluate "intent"

- Clam approves or denies

- If denied, an alert is generated

- A human engineer triages the alert
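The stacked pipeline above can be costed with expected values. This is a sketch, not a model of any vendor's product: `stacked_guard_cost` is a hypothetical helper, and every input (100,000 agent actions a day, 0.1% of them genuinely bad, a 5% false-positive rate, a 10% miss rate, 5 minutes per alert) is an assumption picked for illustration.

```python
from dataclasses import dataclass


@dataclass
class StackCost:
    inferences: float    # model calls per day: one to generate, one to judge
    alerts: float        # denied actions routed to a human
    missed: float        # bad actions the guard let through anyway
    triage_hours: float  # human time spent working the alerts


def stacked_guard_cost(actions_per_day: float, bad_fraction: float,
                       false_positive_rate: float, miss_rate: float,
                       minutes_per_alert: float) -> StackCost:
    """Expected daily load when a probabilistic guard screens every agent action.

    Every input is a hypothetical parameter, not a measured vendor figure.
    """
    benign = actions_per_day * (1 - bad_fraction)
    bad = actions_per_day * bad_fraction
    # Denials = benign actions wrongly flagged + bad actions correctly caught.
    alerts = benign * false_positive_rate + bad * (1 - miss_rate)
    return StackCost(
        inferences=2 * actions_per_day,
        alerts=alerts,
        missed=bad * miss_rate,
        triage_hours=alerts * minutes_per_alert / 60,
    )


cost = stacked_guard_cost(actions_per_day=100_000, bad_fraction=0.001,
                          false_positive_rate=0.05, miss_rate=0.10,
                          minutes_per_alert=5)
print(cost)
```

With these made-up inputs the guard doubles model calls to 200,000 a day, raises about 5,085 alerts (roughly 424 hours of human triage), and still lets about 10 bad actions through. Almost all of the alert volume comes from the false-positive rate on benign traffic, not from actual attacks.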

You have just paid Company B to stop Company A from destroying your infrastructure, and you are still doing the work. You have doubled the API cost, doubled the latency, introduced deadlock risk, and generated more Verification Debt.

And now, with the Steinberger hire, OpenAI is vertically integrating this loop. They own the reasoning, the execution, and soon, they will own the monitoring and the remediation.

The only thing they do not own is liability. That remains with the human.

The Warning Label for CISOs: The Vendor Indemnity Trap

If you are evaluating an agentic security tool, ask the vendor one question:

"Does this tool generate Pull Requests, or does it kill containers?"

If the answer is "Pull Requests," you are buying Verification Debt.

If the answer is "It blocks execution and triggers an automated rebuild," you are buying security.

The Contract Question No Vendor Will Sign

But here is the ultimate stress test. When a vendor pitches their "autonomous remediation agent," ask them to add this clause to the Master Services Agreement:

"[Vendor] assumes full financial liability and indemnifies the Client for any production outages, data loss, compliance violations, or security breaches caused by code generated and merged by [Vendor]'s agent."

They will refuse.

They will point to Section 12 of their Terms of Service, which states:

"The Software is provided 'as is' without warranty of any kind. User is solely responsible for all actions taken using the Software."

This is the tell. When the vendor refuses to indemnify their "autonomous worker," they are admitting what they already know:

They are not selling you an employee. They are selling you liability.

The AI generates the code. The AI opens the Pull Request. But you sign the merge. You assume the legal exposure. You get fired when the hallucination deletes the production database.

This is not a bug in the contract. This is the entire business model. The vendor captures the upside (revenue from API calls). You assume the downside (liability for the agent's mistakes).

If a vendor will not indemnify their agent's output, do not deploy it into production. Period.

The Only Exit: Garbage Collection, Not Infinite Patching

The current AI tooling thesis assumes that the remediation problem can be solved with more agents. It cannot.

You cannot "fix" your way out of exponential entropy. You must garbage collect your way out.

The Architectural Axiom: Agents Are Stochastic, State Must Be Deterministic

Here is the core rule that the YC W26 batch is violating:

Agents are stochastic. State must be deterministic.

The moment you give a probabilistic agent write-access to a mutable system, you have abandoned computer science and embraced gambling.

If an agent wants to change a system, it must:

- Write a declarative manifest (Terraform, Kubernetes YAML, Ansible playbook)

- Submit the manifest for review

- Never touch the running instance directly
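A minimal sketch of the manifest-only rule. `Manifest`, `ReviewGate`, and `reconcile` are hypothetical names standing in for whatever Terraform, Kubernetes, or Ansible workflow you actually run. The agent can only produce a declarative manifest, a human approves one exact manifest by its content hash, and reconciliation builds a new state from the approved manifest instead of editing the live one.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Manifest:
    """Declarative desired state proposed by an agent (hypothetical schema)."""
    resources: dict

    def digest(self) -> str:
        # Canonical JSON so identical content always yields the same hash.
        canonical = json.dumps(self.resources, sort_keys=True).encode()
        return hashlib.sha256(canonical).hexdigest()


class ReviewGate:
    """Records which exact manifests a human has signed off on."""

    def __init__(self) -> None:
        self.approved: set[str] = set()

    def approve(self, manifest: Manifest) -> None:
        self.approved.add(manifest.digest())


def reconcile(live: dict, manifest: Manifest, gate: ReviewGate) -> tuple[dict, set]:
    """Return (new_state, changed_keys) for an approved manifest.

    `live` is only read, never mutated; the caller swaps in the new state.
    """
    if manifest.digest() not in gate.approved:
        raise PermissionError("manifest not approved; running state left untouched")
    # JSON round-trip gives a deep copy that shares nothing with the manifest.
    new_state = json.loads(json.dumps(manifest.resources))
    changed = {k for k in set(live) | set(new_state) if live.get(k) != new_state.get(k)}
    return new_state, changed
```

Because approval is keyed to the SHA-256 digest of the canonical JSON, any edit to the manifest after sign-off produces a different digest and is rejected until a human approves the new version.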

We do not patch. We re-pave.

The architectural pattern that escapes the loop is Immutable Infrastructure:

- If a container is vulnerable, destroy it and redeploy a clean image. Do not patch it in place.

- If a CRM record is corrupted by an agent hallucination, restore from a known-good snapshot. Do not write a compensating transaction.

- If an API call fails semantic validation, block execution entirely. Do not generate a ticket for later review.
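The three re-pave rules above can be sketched as a toy model. `Fleet`, `restore`, and `execute` are hypothetical names, the validation rule that refuses every DELETE is an assumption chosen to echo the DELETE /leads/stale example, and nothing here talks to a real container runtime or CRM.

```python
import copy
from collections.abc import Callable
from dataclasses import dataclass


@dataclass(frozen=True)
class Container:
    id: str
    image_digest: str


class Fleet:
    """Toy fleet: containers are replaced, never edited in place."""

    def __init__(self, golden_digest: str) -> None:
        self.golden_digest = golden_digest
        self._counter = 0
        self.running: dict[str, Container] = {}

    def launch(self) -> Container:
        self._counter += 1
        c = Container(id=f"c{self._counter}", image_digest=self.golden_digest)
        self.running[c.id] = c
        return c

    def repave(self, container_id: str) -> Container:
        # Destroy the suspect container, then start a fresh one from the golden image.
        del self.running[container_id]
        return self.launch()


def restore(snapshot: dict) -> dict:
    # Return a copy of the known-good snapshot instead of a compensating write.
    return copy.deepcopy(snapshot)


def execute(call: dict, validate: Callable[[dict], bool]) -> dict:
    # Block outright on failed validation; no ticket is queued for later review.
    if not validate(call):
        raise PermissionError(f"blocked: {call['method']} {call['path']}")
    return call
```

Each rule replaces a judgment call with a mechanical action: the container is swapped, the record is restored, the call is refused. None of them produces an artifact that a human must review later.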

This is the core move that NIST, the DoD, and cloud-native security vendors now recommend:

"Any changes to a container must be made by rebuilding the image and redeploying the new container image."

– DoD Container Image Creation and Deployment Guide

The human verification burden does not disappear. It moves to a smaller number of choke points:

- The build pipeline (verify the Golden Image)

- The provenance chain (verify the signature)

- The policy gates (verify the runtime constraints)

You still have humans verifying work. But you are verifying 10 artifacts instead of 10,000 patches.
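A sketch of the three choke points as one admission check. It swaps in HMAC-SHA256 with a shared key as a stand-in for real image signing, which production pipelines would do with asymmetric signatures. The key and the two runtime flags (`privileged`, `read_only_root`) are hypothetical and only loosely echo common container-hardening settings. A human verifies the golden image once at build time and signs it. After that, every deploy is checked mechanically against that signature and the policy.

```python
import hashlib
import hmac

# Hypothetical shared key held by the build pipeline (stand-in for a signing key).
BUILD_KEY = b"hypothetical-build-key"


def sign_image(image_bytes: bytes) -> tuple[str, str]:
    """Build pipeline: a human verified this golden image, so sign its digest."""
    digest = hashlib.sha256(image_bytes).hexdigest()
    sig = hmac.new(BUILD_KEY, digest.encode(), hashlib.sha256).hexdigest()
    return digest, sig


def admit(image_bytes: bytes, sig: str, runtime: dict) -> bool:
    """Admission: provenance check plus runtime policy gate, no per-change review."""
    digest = hashlib.sha256(image_bytes).hexdigest()
    expected = hmac.new(BUILD_KEY, digest.encode(), hashlib.sha256).hexdigest()
    provenance_ok = hmac.compare_digest(expected, sig)  # constant-time comparison
    policy_ok = (not runtime.get("privileged", False)
                 and runtime.get("read_only_root", False))
    return provenance_ok and policy_ok
```

Any in-place patch changes the image bytes, so its digest no longer matches the signature and the deploy is refused. The only way back into production is a new golden image that passes the human checkpoint again.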

The YC W26 Doom Loop Portfolio

- Tensol – Deploys OpenClaw agents to automate SDR workflows with unconstrained write-access

- Clam – Sells "semantic firewalls" to protect you from Tensol

- Hex Security – AI pentesting that discovers vulnerabilities continuously

- Winfunc/Corgea/ZeroPath – AI agents that generate Pull Requests to fix Hex findings

- Sonarly – Alert triage for the 5,000 daily alerts generated by Clam

- Emdash – Parallel agent coding to multiply code output

- Sourcebot – Regex search for agents to feed them more context (and generate larger edits)

All funded in the same batch. All selling to each other. All billing the same enterprise customer.

This is not a portfolio. This is a liability amplification loop.

Closing: This Is the Timestamp

OpenAI didn't hire Steinberger to make your life easier. They hired him to own the execution layer playbook before someone else did.

The framework stays open-source. The exploits keep spreading. Tensol and a dozen YC clones will keep deploying unconstrained agents. And OpenAI gets to claim they're the "responsible" ones who "hired the expert" to "ensure safe deployment."

The agents are coming. The question is not whether you will deploy them. The question is whether you will deploy them into an architecture that can survive their mistakes.

If your infrastructure is mutable, every agent is an entropy accelerator.

If your infrastructure is immutable, every agent is a build pipeline validator.

The choice is not slower AI versus faster AI. It is owned execution versus rented execution.